Part Three: Our Obligation To Listen When A Voice Is Used

What limits do we set for ourselves in order to be happy and successful, and to maintain productive boundaries between the different areas of our lives?

Key Points:

The synthetic feeling of keeping someone alive during a period of grief may aspire to ease the pain of loss, but it also carries the ethical risk of prolonging grief itself.

Grieftech runs on the personal disclosure of intimate life stories and thoughts, preserved for survivors, and is extremely empathic in substance and in its intentions toward loved ones. Anonymous engagement, by contrast, is ephemeral, whereas grieftech aspires to endure.

Those not in control of the advantages are the people for whom the product is intended: the survivors, who pay the monthly bills but neither host nor own the data. These are the individuals who must be protected in matters of ethical conflict and permissions negotiation.

‘We must recognize that it is not just a polite gesture to assure our informant-colleagues that they have a voice in what we are doing; we are obligated to listen to that voice when they use it’ (Toelken, 1998)

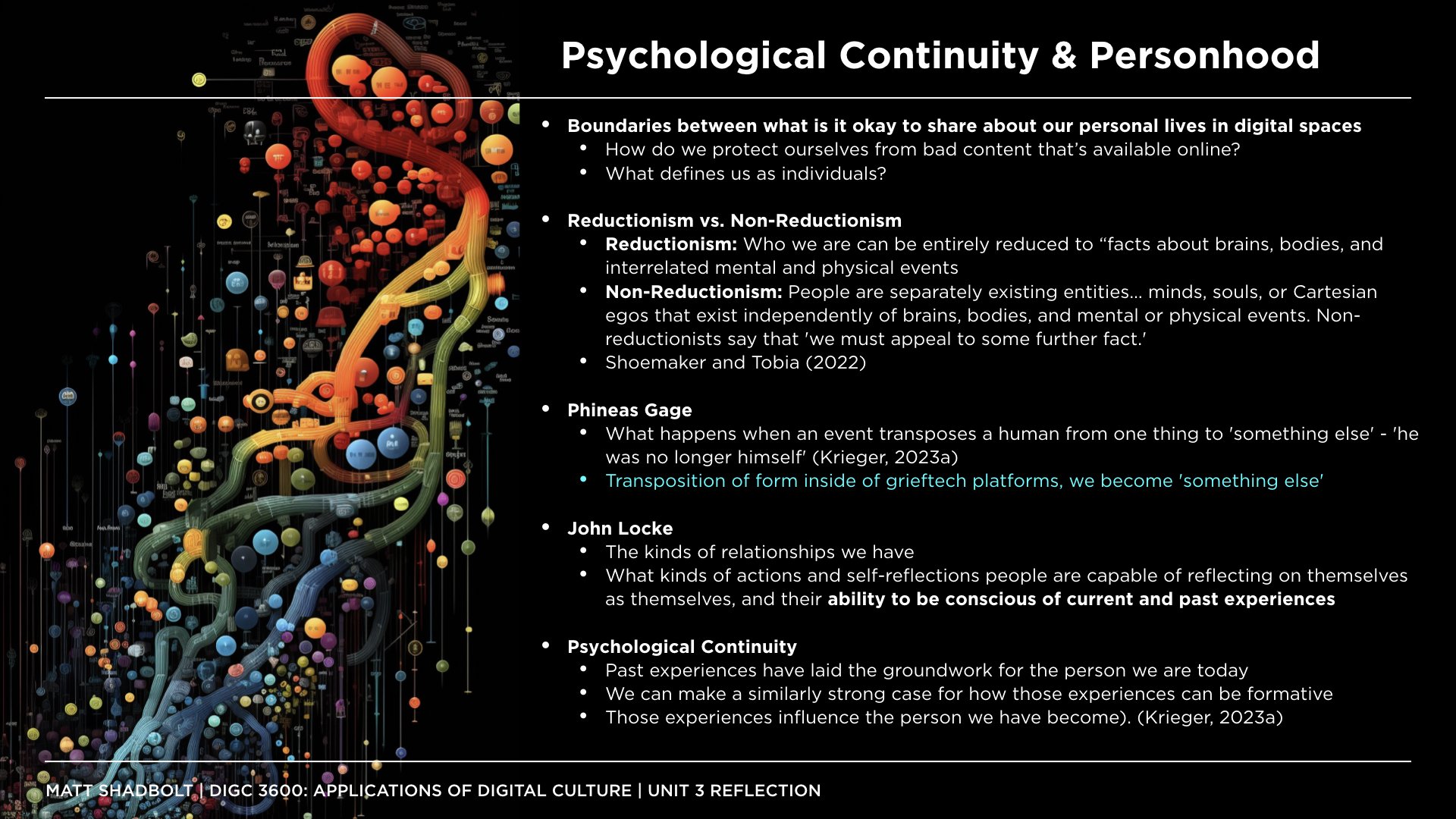

Psychological Continuity & Personhood

A lot happens in the space between a person and a screen. The boundaries we set for ourselves, the boundaries others set for us, and the protections we enforce on others together define our agency and our digital personhood. How do we protect ourselves from bad actors, or from those who would seek to do us harm? How much are we really comfortable revealing about ourselves to strangers? What are the unintended consequences of hitting ‘submit’? Many of these questions coalesce inside grieftech platforms, which can often feel intensely intrusive in how they harvest our personal stories.

Non-reductionists hold that ‘people are separately existing entities, minds, souls or Cartesian egos that exist independently of brains, bodies, and mental or physical events’, and argue that ‘we must appeal to some further fact’ (Shoemaker and Tobia, 2022). In other words, there is something beyond our biological makeup which makes us… us: an essence, a soul, a unique way of being in the world. This is the oil on which grieftech platforms run, and their primary atomic unit of content is the human story.

That story is the personal, intimate event, recalled from memory as a cherished experience and preserved in code for future recall and remembrance. The unique value proposition of these platforms is their ability to capture these emotional moments and let surviving loved ones hear them, seemingly for the first time, through a conversational bot. But to do this, the individual needs to change from physical to digital. They need to become something else, something which is no longer ‘them’.

Echoing the case of Phineas Gage, the nineteenth-century railroad construction foreman who survived an iron rod being driven through his skull by an accidental explosion but lived on as a radically different person from the one he had been before, we become ‘no longer ourselves’ (Krieger, 2023a). That doesn’t stop digital platforms from trying to create the emotional feeling that it’s the same person. It looks like them and it sounds like them, but it’s missing the essence of the individual, even when it’s telling us a story unique to them. We know it’s a synthetic representation, that it’s all code, but as with so many other behaviors online, we engage anyway.

These kinds of self-reflection, and our ability to be conscious of current and past experiences, explored by the philosopher John Locke, help us navigate how what has come before informs what happens next. We’re already aware of how prior digital behavior informs recommendation services and engineered attention, but this dynamic isn’t exclusive to digital spaces. Psychological continuity lays the groundwork for the people we are today: the formative experiences which influence and shape the person we’ve become over time (Krieger, 2023a). This is what grieftech platforms are attempting to bottle: the remembered past, preserved for future recall by others and mediated through a commercial digital host.

Anonymity

We can’t have a conversation about the deep harvesting of individual memory without discussing its opposite, digital anonymity. As of 2013, 86% of US internet users had already taken steps online to mask or remove their digital footprints, whether that meant clearing cookies in their browsers, avoiding using their names on virtual networks, or encrypting their email (Rainie et al., 2013). With growing distrust in other people’s adherence to social norms, and the ability to hide safely behind an anonymized screen name, trolling, doxxing and flaming have become rampant, especially in social spaces. This online disinhibition, in which users say or do things they would not say or do offline, brings a sense of reduced restraint and greater freedom to speak one’s mind and express one’s true feelings about the world (Krieger, 2023a).

Empathy deficit, in which people lack the ability to feel, understand, and resonate with another person’s feelings, and which in its more extreme forms is a psychological disorder, is in many ways the inverse of the remembrance efforts inside grieftech products. Grieftech runs on the personal disclosure of intimate life stories and thoughts, preserved for survivors, and is extremely empathic in substance and in its intentions toward loved ones. Anonymous engagement, by contrast, is ephemeral, whereas grieftech aspires to endure (Stern, 2020). The important point, however, is that anonymity and grieftech are two sides of the same coin: both disrupt existing social norms. What is acceptable in anonymous behavior is culturally determined, as is our relationship with sharing intimate personal stories after death. Both are non-traditional forms of communication, both transcend cultural norms of appropriate behavior, and both reshape what we mean by a person’s essence and identity.

Permissions Of Grief & Restoration Of Life

But what is acceptable to share about another person when they are no longer there to give their own explicit permission? What if they’ve given permission in perpetuity as part of a digital will, or donated their likeness for public use? The internet has ‘taken our values and given us new horizons in which we need to be aware of, and connect with, the value systems of other people’ (Krieger, 2023b). In Western cultures, for example, grief is highly respected and often private, and we have few formal practices for grieving publicly or socially. In emerging digital spaces, grief is positioned more as remembrance, aimed at augmenting happiness and nostalgia rather than reducing sadness. It’s ‘remembrance reinvented’ rather than an explicitly designed system for coping with loss.

Importantly, though, these rituals of remembering aren’t new. They’re culturally determined, and the means by which we cope with death are as old as death itself. In Ancient Egypt, writing or speaking the name of someone who had died was believed to restore them to life. In digital spaces the same holds true: sharing a person’s voice keeps them alive and part of our world long after they have physically died, for as long as the server which holds them is running (Krieger, 2023b). But in many cultures the opposite is true, and it’s forbidden to utter the name of a dead person, particularly in front of their relatives. Grieftech transgresses these silent boundaries around death, boundaries that mark our understanding of what it means to be alive or not. The synthetic feeling of keeping someone alive during a period of grief may aspire to ease the pain of loss, but it also carries the ethical risk of prolonging grief itself. Without the firm closure offered by a physical passing, we may simply be extending our mourning well into the future, which carries its own psychological weight and consequences. Bina Venkataraman, writing in The Washington Post, puts it best when she argues:

“These ghosts need not pretend to any starring role among the living. Better that they make only cameo appearances. After all, no one wants a future in which the dead fail to exit stage right. The quest for immortality is ancient, and it’s starting to look its age — no matter what new technology dresses it up. A truly radical future would be one where people no longer try to live forever, where loss is accepted and the living are well taken care of — where we live, let die and make room for the new.” (Venkataraman, 2023)

Toelken's Yellowman Tapes

We’ll end again with a real story. Folklorist Dr. Barre Toelken collected Navajo stories from one specific community member over 43 years. With resonant echoes of grieftech’s content uploading, he recorded stories and oral histories with intimately specific cultural dimensions: stories central to Navajo systems of belief, which only certain culturally determined people could hear. But over time these recordings became problematic. Questions of who could hear them and to whom Toelken could play them, along with issues of rights, permissions and ownership, increasingly motivated Toelken, especially as he grew older, to address the concerns around the recordings he had harvested as his life’s work. Even though the person he interviewed had given his permission, what should happen to these documents after his death? (Krieger, 2023b).

Toelken’s response was both empathic and sensitive. He argued that ‘folklorists stand to learn more and do better work when scholarly decisions are guided by the culture we study, even when taking this course causes disruption in our academic assumptions’, and that we should avoid situations ‘where the disposal of materials embody dangers to researchers, to the natural world, and to themselves’ (Toelken, 1998). The same holds true for issues of unintended consequence in grieftech products. As Toelken concludes, ‘while overt exploitation did not take place, one party in this contract enjoyed inherent advantages by virtue of controlling the infrastructure and the output, it is instructive to recall that in contract disputes, a mediator usually resolves issues in favor of the party that was not in control of the advantages’.

For grieftech, then, the questions become ones of control and advantage. Who stands to gain the most? The person creating the content? The organization hosting it? Or the end user for whom the work was intended in the first place? These questions are deeply subjective and highly complex, but I come down on the side of the organization facilitating the data capture as the party which enjoys the most advantages in such an exchange. It stands to benefit financially from recurring monthly payments, from the individualized data it can use to build better and more competitive products, and from the market equity and habituation it builds among end users. If we hold this to be true, those not in control of the advantages are the people for whom the product is intended: the survivors, who pay the monthly bills but neither host nor own the data. These are the individuals who must be protected in matters of ethical conflict and permissions negotiation. In Toelken’s words, ‘we must recognize that it is not just a polite gesture to assure our informant-colleagues that they have a voice in what we are doing; we are obligated to listen to that voice when they use it’ (Toelken, 1998).

References

Krieger, M. (2023a). Unit 3.1 Digital values – extending the conversation around privacy from social identities to personal identities (10:26). [Digital Audio File]. Retrieved from: https://canvas.upenn.edu/courses/1693062/pages/unit-3-dot-1-digital-values-extending-the-conversation-around-privacy-from-social-identities-to-personal-identities-10-26?module_item_id=26566563.

Krieger, M. (2023b). Unit 3.2 Values and being an individual, cultural values around human life and the Yellow Man Tapes (12:38). [Digital Audio File]. Retrieved from: https://canvas.upenn.edu/courses/1693062/pages/unit-3-dot-2-values-and-being-an-individual-cultural-values-around-human-life-and-the-yellow-man-tapes-12-38?module_item_id=26838825.

Rainie, L., Kiesler, S., Kang, R. & Madden, M. (2013). Anonymity, Privacy, and Security Online. Pew Research. Retrieved from: https://www.pewresearch.org/internet/2013/09/05/anonymity-privacy-and-security-online/.

Shoemaker, D. & Tobia, K. (2022). Personal Identity. In The Oxford Handbook of Moral Psychology. Oxford Handbooks (2022; online edn, Oxford Academic, 20 Apr. 2022). Retrieved from: https://doi-org.proxy.library.upenn.edu/10.1093/oxfordhb/9780198871712.013.28.

Stern, J. (2020). How Tech Can Bring Our Loved Ones to Life After They Die | WSJ. YouTube.com. [Digital Video File]. Retrieved from: https://www.youtube.com/watch?v=aRwJEiI1T2M.

Toelken, B. (1998). The Yellowman Tapes, 1966-1997. The Journal of American Folklore 111. [Digital File]. Retrieved from: https://www.sas.upenn.edu/folklore/center/libro_nov30.pdf.

Venkataraman, B. (2023). Look out. AI ghosts are coming. The Washington Post. Retrieved from: https://www.washingtonpost.com/opinions/2023/06/22/ai-avatar-death-ghost-future/.